blog.gladis.org

about linux, python and stuff ...

[2023-01-05] Speed up AUR builds

I just found out that you can put your own makepkg.conf in your home folder under ~/.config/pacman/makepkg.conf. Setting MAKEFLAGS there lets make run as many parallel jobs as there are CPU cores:

MAKEFLAGS="-j$(nproc)"

I just put this in my dotfiles repo and now each new Arch install will have faster AUR builds.
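A minimal sketch of how a dotfiles install script could create this file. It assumes bash and that makepkg reads the user file after /etc/makepkg.conf, so only the overridden variable needs to be in it. The helper name write_makepkg_conf is hypothetical, and the config directory defaults to $XDG_CONFIG_HOME (falling back to ~/.config).

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): write a per-user makepkg.conf that
# only overrides MAKEFLAGS. The config dir defaults to $XDG_CONFIG_HOME,
# falling back to ~/.config, and can be passed as $1 instead.
write_makepkg_conf() {
    local config_home=${1:-${XDG_CONFIG_HOME:-$HOME/.config}}
    mkdir -p "$config_home/pacman"
    # Quoted 'EOF': $(nproc) is written literally and only evaluated
    # when makepkg sources the file at build time.
    cat > "$config_home/pacman/makepkg.conf" <<'EOF'
MAKEFLAGS="-j$(nproc)"
EOF
}
```

Calling write_makepkg_conf without arguments writes ~/.config/pacman/makepkg.conf and overwrites an existing one.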

[2023-01-04] reboot into UEFI settings (BIOS)

I boot via UEFI and can reboot straight into the firmware ("BIOS") setup with this command:

systemctl reboot --firmware-setup

See the Arch wiki for more information.
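A small sketch that only asks for the firmware-setup reboot on a UEFI system. It relies on /sys/firmware/efi existing only when the kernel was booted via UEFI; the helper names booted_via_uefi and reboot_to_firmware are hypothetical, and the directory is a parameter so the check can be tried without rebooting.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper names): guard the firmware-setup reboot.
booted_via_uefi() {
    # /sys/firmware/efi is present only when the kernel was started via UEFI
    [ -d "${1:-/sys/firmware/efi}" ]
}

reboot_to_firmware() {
    if booted_via_uefi; then
        systemctl reboot --firmware-setup
    else
        echo "Not booted via UEFI; --firmware-setup is not available." >&2
        return 1
    fi
}
```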

[2021-02-03] Install Python package from private git repository

1. Set up the private library repo

1.1. Create a deploy key (leave the passphrase empty, since the CI job can't enter one)

$ ssh-keygen -t ed25519 -C "deploy_key_library" -f /tmp/deploy
$ cat /tmp/deploy.pub

1.2. Add the public key as a deploy key to the library repo on GitHub: https://github.com/hwmrocker/test_private_library/deploy_keys

2. Set up the app repo

2.1. Add the private key to GitHub as an Actions secret of the app repo

$ cat /tmp/deploy

Paste the output at https://github.com/hwmrocker/test_action_build/settings/secrets/actions/new and name the secret TEST_LIB_DEPLOY_KEY.

2.2. Add a test

from test_private_library import random


def test_random():
    assert random() == 4

2.3. Define the requirements.txt

git+ssh://git@github.com/hwmrocker/test_private_library.git#egg=test_private_library

pytest

2.4. Add a GitHub Action that installs the library

name: PyTest
on: push
jobs:
  test:
    runs-on: ubuntu-latest
    timeout-minutes: 10
    steps:
      - name: Check out repository code
        uses: actions/checkout@v2
      # Setup Python (faster than using Python container)
      - name: Setup Python
        uses: actions/setup-python@v2
        with:
          python-version: "3.10"
      - name: Install dependencies
        env:
          DEPLOY_KEY: ${{secrets.TEST_LIB_DEPLOY_KEY}}
        run: |
          eval "$(ssh-agent -s)"
          echo "${DEPLOY_KEY}" | tr -d '\r' | ssh-add -
          mkdir -p ~/.ssh
          chmod 700 ~/.ssh
          pip install -r requirements.txt
      - name: Run tests
        run: |
          pytest

These lines load the SSH key:

env:
  DEPLOY_KEY: ${{secrets.TEST_LIB_DEPLOY_KEY}}
run: |
  eval "$(ssh-agent -s)"
  echo "${DEPLOY_KEY}" | tr -d '\r' | ssh-add -
  mkdir -p ~/.ssh
  chmod 700 ~/.ssh

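You can't run them in a separate step; they have to run in the same step that installs the dependencies. Every step runs in a new shell, so the SSH_AUTH_SOCK variable that ssh-agent sets up is not available in later steps.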
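To try the same install locally, one option (not part of the workflow above) is to point git at the deploy key through the GIT_SSH_COMMAND environment variable, which git uses for ssh transports and which the git processes started by pip inherit. The helper name pip_install_with_key is hypothetical, and the key path must not contain spaces because git runs the command through a shell.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): pip install over ssh with one key.
# Usage: pip_install_with_key /tmp/deploy -r requirements.txt
pip_install_with_key() {
    local key=$1
    shift
    # IdentitiesOnly=yes makes ssh use only this key, not other agent keys.
    # The key path must not contain spaces (git runs this via a shell).
    GIT_SSH_COMMAND="ssh -i ${key} -o IdentitiesOnly=yes" pip install "$@"
}
```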

[2020-10-22] Trigger build after file change

Install inotify-tools, for example with yay:

yay -S inotify-tools

Then you can trigger a Sphinx build whenever a file in docs/ changes (the loops below use fish shell syntax):

while true; inotifywait -e close_write -r docs/; make dirhtml; end

Or (re)start a love2d game, but only when a Lua file changes:

while true; inotifywait -e close_write -r --include ".*\.lua" . ; love .; end

inotifywait blocks until the requested event happens; with -e close_write, that is when a file that was opened for writing is closed.
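In bash, the same watch loop could look like this sketch. The helper name watch_and_run is hypothetical. Using inotifywait as the loop condition means the loop also stops if inotifywait itself fails, for example when it is not installed.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): re-run a command after every write
# to a file below a directory.
# Usage: watch_and_run docs/ make dirhtml
watch_and_run() {
    local dir=$1
    shift
    # inotifywait returns 0 after a close_write event; on an error
    # (e.g. not installed) it returns non-zero and the loop ends.
    while inotifywait -e close_write -r "$dir"; do
        "$@"
    done
}
```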

[2018-05-07] Clean the pacman package cache automatically on Arch Linux

To clean up the pacman package cache once, run:

# paccache -rk2

This removes cached packages but keeps the 2 most recent versions of each package. The Arch wiki has more useful information.
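Before deleting anything, paccache can show what it would remove. A sketch using its dry-run (-d) and uninstalled-only (-u) options; the wrapper name preview_cache_cleanup is hypothetical and takes the number of versions to keep.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): preview what paccache would delete.
# Nothing is removed, because every call uses -d (dry run).
preview_cache_cleanup() {
    local keep=${1:-2}
    # Cached versions beyond the $keep most recent ones of each package
    paccache -dk "$keep"
    # Every cached version of packages that are no longer installed
    paccache -duk0
}
```

Swapping -d for -r (as root) actually deletes the files, which is what the hook further down does with -rvk2.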

To run this automatically after every package install, upgrade, or removal, create a pacman hook:

# mkdir -p /etc/pacman.d/hooks/

# vim /etc/pacman.d/hooks/remove_old_cache.hook

and fill in the following

[Trigger]
Operation = Remove
Operation = Install
Operation = Upgrade
Type = Package
Target = *

[Action]
Description = Keep the last cache and the currently installed.
When = PostTransaction
Exec = /usr/bin/paccache -rvk2

I read about this solution in the Arch forums.

When you update your system you can see that the hook is running. Your output should be similar to this:

# pacman -Syu
...
( 8/13) Keep the last cache and the currently installed.
removed '/var/cache/pacman/pkg/lib32-glibc-2.26-11-x86_64.pkg.tar.xz'
removed '/var/cache/pacman/pkg/linux-headers-4.16.5-1-x86_64.pkg.tar.xz'
removed '/var/cache/pacman/pkg/glibc-2.26-11-x86_64.pkg.tar.xz'
removed '/var/cache/pacman/pkg/gcc-7.3.1+20180312-2-x86_64.pkg.tar.xz'

[2018-01-05] How to fix an expired GPG key to be able to run a system update on Arch Linux again

When I ran my first update of the new year, one of the GPG keys had expired and I couldn't update my system.

$ sudo pacman -Syyu
error: pkgbuilder: signature from "Chris Warrick <[email protected]>" is unknown trust
:: Synchronizing package databases...
core 126.8 KiB 1527K/s 00:00 [#############################################################################] 100%
extra 1639.8 KiB 10.0M/s 00:00 [#############################################################################] 100%
community 4.3 MiB 18.0M/s 00:00 [#############################################################################] 100%
multilib 168.6 KiB 23.5M/s 00:00 [#############################################################################] 100%
pkgbuilder 846.0 B 0.00B/s 00:00 [#############################################################################] 100%
pkgbuilder.sig 310.0 B 0.00B/s 00:00 [#############################################################################] 100%
error: pkgbuilder: signature from "Chris Warrick <[email protected]>" is unknown trust
error: failed to update pkgbuilder (invalid or corrupted database (PGP signature))
sublime-text 1080.0 B 0.00B/s 00:00 [#############################################################################] 100%
sublime-text.sig 543.0 B 0.00B/s 00:00 [#############################################################################] 100%
error: database 'pkgbuilder' is not valid (invalid or corrupted database (PGP signature))

The solution was to refresh all keys with

$ sudo pacman-key --refresh-keys

[2017-10-08] fixed netctl connection problem

For a while I couldn't connect to a wireless network via netctl. But since connecting manually like this worked:

wpa_supplicant -B -i wlp4s0 -c <(wpa_passphrase "my_ssid" "mypassword")

I didn't bother too much. Today I read through the documentation again and found a suggestion for when the connection fails.

I added this line manually to /etc/netctl/my_profile_name

ForceConnect=yes

And sudo netctl start home was successful \o/ I removed the line again and it still works; strange, but who cares.

[2017-08-04] kill blocking or frozen ssh connection

I have googled this key combination so many times now that I'm adding it here for future reference.

To kill a frozen ssh connection, press these 3 keys one after another:

[ENTER] [~] [.]

[2017-05-24] mount an encrypted partition in a terminal

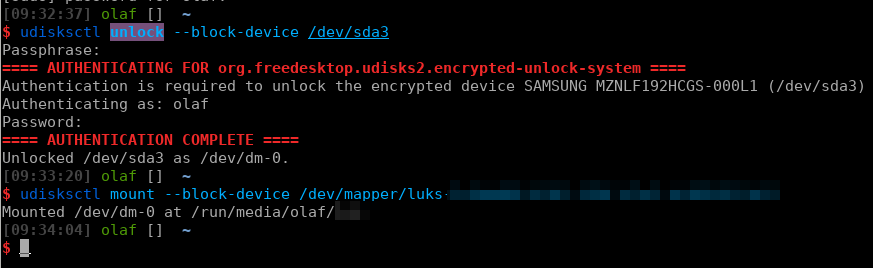

Here is how to mount an encrypted partition that was created with gnome-disks from the command line, on a system without GNOME.

$ udisksctl unlock --block-device /dev/sda3

$ udisksctl mount --block-device /dev/mapper/luks-3c6da966-4101-470f-b7e5-cb385f93fd1f
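The luks-<UUID> mapper name differs for every partition. A sketch that looks it up after unlocking instead of typing it, assuming the unlocked container appears as a child of type "crypt" in lsblk's output. The helper names crypt_child and unlock_and_mount are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper names): unlock a LUKS partition with
# udisksctl, then mount the cleartext device it creates.
crypt_child() {
    # Reads "NAME TYPE" lines and prints the name of the crypt device
    awk '$2 == "crypt" { print $1 }'
}

unlock_and_mount() {
    local part=$1 dev
    udisksctl unlock --block-device "$part" || return 1
    # -n: no header, -r: raw output, -p: full paths, -o: chosen columns
    dev=$(lsblk -nrpo NAME,TYPE "$part" | crypt_child)
    [ -n "$dev" ] || return 1
    udisksctl mount --block-device "$dev"
}
```

Usage: unlock_and_mount /dev/sda3. When done, udisksctl unmount --block-device on the mapper device followed by udisksctl lock --block-device /dev/sda3 reverses both steps.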

[2017-02-01] run pygame for python3 in docker

To run an Xorg application in Docker you just need to mount the Xorg socket into the container. First, disable X access control so that the app inside the container can connect to the Xorg socket:

xhost +

After that you can run the container and mount the socket:

docker run -it --rm -v /tmp/.X11-unix:/tmp/.X11-unix -v $PWD:/home -e DISPLAY=unix$DISPLAY \
    --device /dev/snd --name pygame3 olafgladis/python3-pygame python3 /home/app.py

The important part is -v /tmp/.X11-unix:/tmp/.X11-unix. If you want to write your pygame application in Python 3, you don't have to compile pygame yourself; just use this Docker container. The Dockerfile for it can be found here. For a quick demo I also provided a small snake app and a bash script to start it inside the container.

AFAIK this only works on Linux.
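A sketch of a wrapper around the docker run command from this post that opens X access only for local clients (xhost +local: instead of xhost +, which allows every host) and revokes it again when the container exits. The function name run_pygame is hypothetical; the image name and mounts are the ones used above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): run the pygame container with
# temporary, local-only X access.
# Usage: run_pygame /path/to/dir/with/app.py
run_pygame() {
    local app_dir=${1:-$PWD} status
    # Allow X connections from local (non-network) clients only
    xhost +local: >/dev/null
    docker run -it --rm \
        -v /tmp/.X11-unix:/tmp/.X11-unix \
        -v "$app_dir":/home \
        -e DISPLAY="unix$DISPLAY" \
        --device /dev/snd \
        --name pygame3 \
        olafgladis/python3-pygame python3 /home/app.py
    status=$?
    # Revoke the access again, even if the container failed
    xhost -local: >/dev/null
    return "$status"
}
```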